STAT 360 - Lecture 13

What we will cover today:

- The Mean of a Linear Combination of R.V.'s

- The Variance of a Linear Combination of R.V.'s

- What about Non-linear Combinations of R.V.'s?

- Bernoulli process

- Binomial and Multinomial Distributions

The Mean of a Linear Combination of R.V.'s

Recall the definitions for the expected value of a discrete random variable $X$ and a continuous random variable $Y$, respectively:

- $E[X] = \sum_x xf(x)$

- $E[Y] = \int_{-\infty}^{\infty} yf(y)dy$

Recall from Calculus the linearity properties of summations and integrals:
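For reference, the standard linearity properties can be written as follows, for constants $a$ and $b$ and functions $g$ and $h$ (this is the usual calculus statement, filled in here because the notes do not spell it out):

$$\sum_x \left[a\,g(x) + b\,h(x)\right] = a\sum_x g(x) + b\sum_x h(x)$$

$$\int \left[a\,g(x) + b\,h(x)\right]dx = a\int g(x)\,dx + b\int h(x)\,dx$$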

Unsurprisingly, the following theorems hold and are easily proved (see the textbook or try it yourself):

- For $a,b$ constants, $E[aX + b] = aE[X] + b$

- $E[g(X) \pm h(X)] = E[g(X)] \pm E[h(X)]$

- $E[g(X, Y) \pm h(X, Y)] = E[g(X, Y)] \pm E[h(X, Y)]$
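The first of these theorems can be checked numerically. The sketch below is only an illustration: the p.m.f. and the constants `a`, `b` are made-up values, not from the lecture.

```python
from fractions import Fraction

# Hypothetical p.m.f. of a discrete r.v. X (values chosen only for illustration)
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def expect(g, pmf):
    """E[g(X)] = sum over x of g(x) * f(x)."""
    return sum(g(x) * p for x, p in pmf.items())

a, b = 3, 5
lhs = expect(lambda x: a * x + b, pmf)   # E[aX + b], computed directly
rhs = a * expect(lambda x: x, pmf) + b   # aE[X] + b
print(lhs, rhs)
```

Both sides come out equal because the sum defining $E[aX+b]$ splits term by term.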

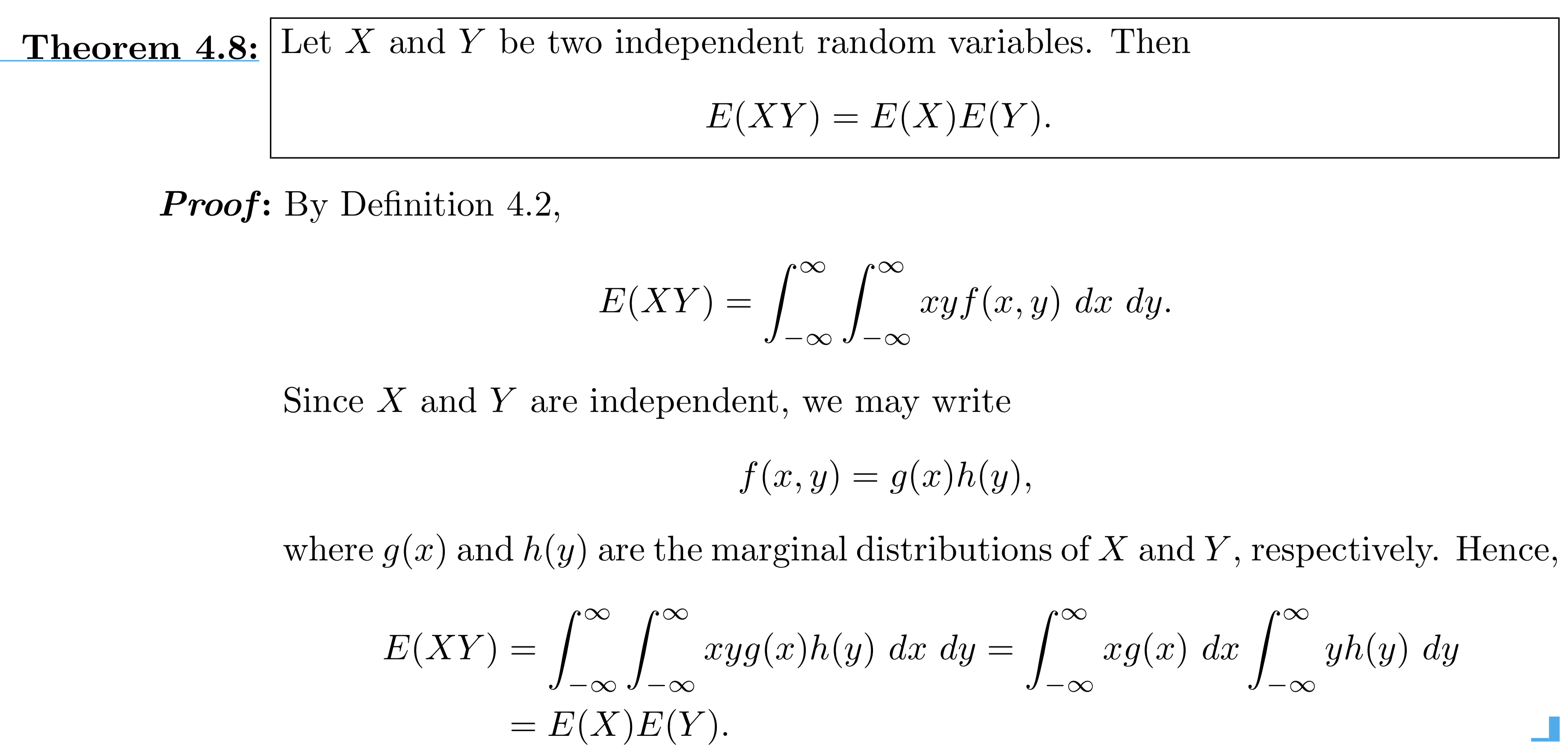

A little more interesting is Theorem 4.8: if $X$ and $Y$ are independent, then $E(XY) = E(X)E(Y)$.

Note: not a linear combination! The caveat is that $X$ and $Y$ must be independent.


Moving on to Variance...

Recall the definition of the variance of a random variable $X$:

$Var(X) = E[(X - E(X))^2]$

Theorem: $Var(aX + b) = a^2Var(X)$

Proof: Let $\mu = E[aX+b]$, then $Var(aX + b) = E[((aX+b)-\mu)^2]$

$=E[(aX+b)^2 - 2(aX+b)\mu + \mu^2]$

$=E[(aX+b)^2] - 2\mu^2 + \mu^2$

$=a^2E[X^2]+2abE[X]+b^2 - (aE[X]+ b)^2$

$=a^2E[X^2]+2abE[X]+b^2 - (a^2(E[X])^2 +2abE[X] +b^2)$

$=a^2(E[X^2] - (E[X])^2)$

$=a^2Var(X)$
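A quick numerical check of this theorem, as a sketch only: a fair six-sided die and the constants `a = -2`, `b = 7` are assumptions chosen for illustration.

```python
from fractions import Fraction

# Assumed example: X is the face shown by a fair six-sided die
die = {k: Fraction(1, 6) for k in range(1, 7)}

def expect(g, pmf):
    """E[g(X)] for a discrete p.m.f."""
    return sum(g(x) * p for x, p in pmf.items())

def variance(g, pmf):
    """Var(g(X)) = E[(g(X) - E[g(X)])^2]."""
    mu = expect(g, pmf)
    return expect(lambda x: (g(x) - mu) ** 2, pmf)

a, b = -2, 7
var_x = variance(lambda x: x, die)            # Var(X)
var_lin = variance(lambda x: a * x + b, die)  # Var(aX + b)
print(var_x, var_lin)
```

The shift `b` drops out entirely, and the scale `a` enters squared, so the sign of `a` does not matter.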

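Similarly, we can prove the more general theorem (Theorem 4.9): for constants $a$, $b$, $c$,

$Var(aX + bY + c) = a^2Var(X) + b^2Var(Y) + 2ab\,cov(X,Y)$

which your textbook details, but I recommend performing this proof on your own as an exercise.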

Recall that $cov(X,Y) = E[(X - E(X))(Y - E(Y))] = E(XY) - E(X)E(Y)$

Now theorem 4.8 states that if $X$ and $Y$ are independent, then $E(XY) = E(X)E(Y)$

As we had suspected, if $X$ and $Y$ are independent, then their covariance is 0. However the converse is not the case!
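A standard counterexample for the converse, sketched in Python (the specific choice of $X$ uniform on $\{-1, 0, 1\}$ with $Y = X^2$ is an illustration, not taken from the lecture):

```python
from fractions import Fraction

# X is uniform on {-1, 0, 1} and Y = X^2, so Y is completely determined by X
p = Fraction(1, 3)
joint = {(x, x * x): p for x in (-1, 0, 1)}  # joint p.m.f. of (X, Y)

E_X = sum(x * q for (x, y), q in joint.items())
E_Y = sum(y * q for (x, y), q in joint.items())
E_XY = sum(x * y * q for (x, y), q in joint.items())
cov = E_XY - E_X * E_Y

# Independence would require P(X=0, Y=0) = P(X=0) * P(Y=0)
p_x0 = sum(q for (x, y), q in joint.items() if x == 0)
p_y0 = sum(q for (x, y), q in joint.items() if y == 0)
p_x0_y0 = joint.get((0, 0), Fraction(0))
print(cov, p_x0_y0, p_x0 * p_y0)
```

The covariance is zero, yet the joint probability does not factor, so $X$ and $Y$ are dependent.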

And we get the more general corollary to theorem 4.9:

If $X_1, X_2, \dots, X_n$ are independent r.v.'s, then $Var(a_1X_1 + a_2X_2 + \dots +a_nX_n) =$ $a_1^2Var(X_1) + a_2^2Var(X_2) + \dots + a_n^2Var(X_n)$
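The corollary can be verified exactly for a small case. In this sketch, two independent fair dice and the coefficients `a1 = 2`, `a2 = -3` are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import product

die = {k: Fraction(1, 6) for k in range(1, 7)}  # assumed: fair six-sided die

def mean(pmf):
    return sum(x * p for x, p in pmf.items())

def var(pmf):
    mu = mean(pmf)
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

a1, a2 = 2, -3

# p.m.f. of W = a1*X1 + a2*X2; independence means joint probabilities multiply
w_pmf = {}
for (x1, p1), (x2, p2) in product(die.items(), die.items()):
    w = a1 * x1 + a2 * x2
    w_pmf[w] = w_pmf.get(w, 0) + p1 * p2

lhs = var(w_pmf)                                # Var(a1*X1 + a2*X2)
rhs = a1 ** 2 * var(die) + a2 ** 2 * var(die)   # a1^2 Var(X1) + a2^2 Var(X2)
print(lhs, rhs)
```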

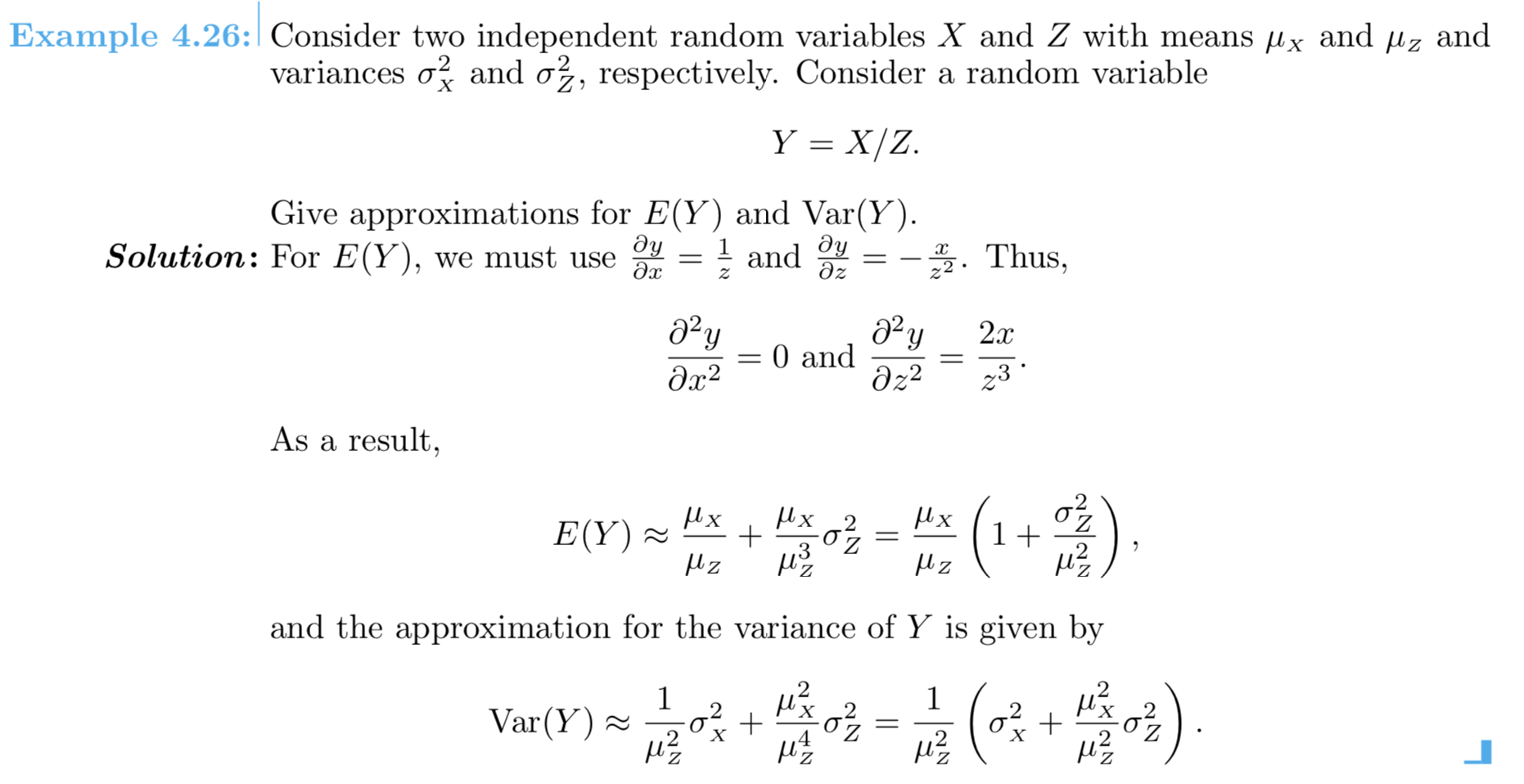

What if the function is nonlinear?

Bad news: no general rule for getting the exact expectation and variance of a random variable $Y = g(X)$ where $g$ is a nonlinear function.

Good news: we can use the Taylor series approximation of the nonlinear function $g$ centered at $\mu = E(X)$ to get the approximate expectation and variance of $g(X)$.

2nd Order Approximation of Expectation

$$E[g(X)] \approx g(\mu_X) + \left. \frac{d^2g(x)}{dx^2}\right\rvert_{x=\mu_X}\frac{Var(X)}{2}$$

1st Order Approximation of Variance

$$Var[g(X)] \approx \left( \left.\frac{dg(x)}{dx}\right\rvert_{x=\mu_X}\right)^2 Var(X)$$
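To see how close these approximations can be, here is a small sketch comparing them with exact values. The p.m.f. and the choice $g(x) = \ln x$ are assumptions made for illustration; the derivatives are worked out by hand.

```python
from math import log

# Hypothetical discrete r.v. X concentrated near its mean (values are made up)
pmf = {9: 0.25, 10: 0.5, 11: 0.25}

def expect(g):
    """E[g(X)] under the p.m.f. above."""
    return sum(g(x) * p for x, p in pmf.items())

mu = expect(lambda x: x)                 # E(X)
var_x = expect(lambda x: (x - mu) ** 2)  # Var(X)

# g(x) = ln(x), with first and second derivatives
g = log
dg = lambda x: 1 / x
d2g = lambda x: -1 / x ** 2

approx_mean = g(mu) + d2g(mu) * var_x / 2  # 2nd order approximation of E[g(X)]
approx_var = dg(mu) ** 2 * var_x           # 1st order approximation of Var[g(X)]

exact_mean = expect(g)
exact_var = expect(lambda x: (g(x) - exact_mean) ** 2)
print(approx_mean, exact_mean)
print(approx_var, exact_var)
```

The approximations work well here because $X$ varies little relative to its mean; they tend to degrade when $Var(X)$ is large or $g$ is strongly curved near $\mu_X$.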

The Bernoulli Process:

- The experiment consists of a sequence of repeated trials.

- Each trial results in one outcome from two possible outcomes: success versus failure.

- The trials are independent, so the outcome on one trial does not affect another.

- The probability of success, $p$, remains constant from trial to trial.

Example 1

Consider the set of Bernoulli trials where 3 items are selected at random from a manufacturing process, inspected, and classified as defective or nondefective. A defective item is designated a success.

The number of successes is a random variable $X$ assuming integer values from 0 through 3. The eight possible outcomes and the corresponding values of $X$ are:

| Outcome | $NNN$ | $NDN$ | $NND$ | $DNN$ | $NDD$ | $DND$ | $DDN$ | $DDD$ |

| $x$ | 0 | 1 | 1 | 1 | 2 | 2 | 2 | 3 |

Does example 1 describe a Bernoulli process? Why or why not?

Example 2

The pool of prospective jurors for a certain case consists of 50 individuals, of whom 35 are employed. Suppose that 6 of these individuals are randomly selected one by one to sit in the jury box for initial questioning by lawyers for the defense and the prosecution. Label the $i$th person selected (the $i$th trial) as a success $S$ if he or she is employed and a failure $F$ otherwise.

Does example 2 describe a Bernoulli process? Why or why not?

What if we had access to 500,000 individuals, of whom 400,000 are employed, and had to sample only 10 of them?

So the Bernoulli process can be seen either as sampling with replacement from a small dichotomous population (e.g. heads vs. tails from coin tosses),

or as sampling without replacement from a dichotomous population of size $N$, such that the number of trials $n$ is at most 5% of the population size (e.g. picking 3 items from a large manufacturing assembly line in order to test for defective parts).
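The effect of sampling without replacement can be made concrete by comparing the chance of a success on the second draw, given the first draw. This is a sketch; the helper name `second_success_probs` is hypothetical.

```python
from fractions import Fraction

def second_success_probs(N, M):
    """For a population of N with M successes, sampled without replacement:
    returns (P(2nd is S | 1st is S), P(2nd is S | 1st is F))."""
    return Fraction(M - 1, N - 1), Fraction(M, N - 1)

small = second_success_probs(50, 35)            # jury pool from Example 2
large = second_success_probs(500_000, 400_000)  # the much larger population

print([float(q) for q in small])  # noticeably different from each other and from 35/50
print([float(q) for q in large])  # both very close to 400000/500000 = 0.8

# Sampling fractions n/N, to compare against the 5% guideline
frac_small = 6 / 50
frac_large = 10 / 500_000
```

In the small pool the trials are clearly dependent and the sample is 12% of the population, while in the large population the dependence is negligible and the sample is far below 5%.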

The Binomial Random Variable

The binomial random variable $X$ associated with a Bernoulli process (also known as a binomial experiment) consisting of $n$ trials is defined as: $X =$ the number of $S$'s (successes) among the $n$ trials.

The Binomial Distribution

If $X$ is a binomial random variable, it will depend on two important parameters: $n$ the number of trials and $p$ the probability of success.

We denote the p.m.f of a binomial r.v. by $b(x; n, p)$.

For example, consider tossing a biased coin with probability $p$ of showing $H$ead $= S$ four times:

Question: How many outcomes have 3 successes?
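One way to answer the question is to enumerate all $2^4 = 16$ outcomes. A minimal Python sketch (the labels `S`/`F` and the variable names are illustrative):

```python
from itertools import product
from math import comb

# All sequences of 4 tosses, each toss being S (head) or F (tail)
outcomes = ["".join(seq) for seq in product("SF", repeat=4)]

# Keep only the sequences with exactly 3 successes
three_s = [o for o in outcomes if o.count("S") == 3]
print(three_s)                    # ['SSSF', 'SSFS', 'SFSS', 'FSSS']
print(len(three_s), comb(4, 3))   # 4 4
```

Each of these outcomes has probability $p^3(1-p)$, and their count matches ${4 \choose 3} = 4$, which is where the binomial coefficient in the p.m.f. comes from.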

The Binomial p.m.f.

$$b(x; n, p) = {n \choose x}p^x(1-p)^{n-x},$$ where $x = 0,1,2,\dots,n$, $n$ is the number of trials, and $p$ is the probability of success.

Example

Each of six randomly selected cola drinkers is given a glass containing cola S and one containing cola F. The glasses are identical in appearance except for a code on the bottom to identify the cola.

Suppose there is actually no tendency among cola drinkers to prefer one cola to the other, so that $p = P$(a selected individual prefers S) = 0.5. Let $X$ be the number among the six who prefer S, that is $X \sim Bin(6,0.5)$.

Compute $P(X = 3)$.

Compute $P(X \leq 5)$.
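Both probabilities can be computed directly from the p.m.f. $b(x; n, p)$. The sketch below uses only the Python standard library; the function name `binom_pmf` is an illustrative choice.

```python
from math import comb

def binom_pmf(x, n, p):
    """b(x; n, p) = C(n, x) * p^x * (1 - p)^(n - x)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

n, p = 6, 0.5

p_eq_3 = binom_pmf(3, n, p)                          # P(X = 3) = 20/64
p_le_5 = sum(binom_pmf(x, n, p) for x in range(6))   # P(X <= 5), summing x = 0..5
p_le_5_alt = 1 - binom_pmf(6, n, p)                  # same thing via the complement

print(p_eq_3)   # 0.3125
print(p_le_5)   # 0.984375
```

Using the complement $P(X \leq 5) = 1 - P(X = 6)$ is the quicker route by hand, since only one term is needed.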

The Binomial C.D.F.

$$B(x; n, p) = P(X \leq x) = \sum_{i=0}^{x} b(i; n, p),$$ where $x = 0,1,2,\dots,n$, $n$ is the number of trials, and $p$ is the probability of success.
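The c.d.f. is also handy for interval probabilities, since $P(a \leq X \leq b) = B(b; n, p) - B(a-1; n, p)$. A short sketch, with illustrative function names and an assumed example $X \sim Bin(6, 0.5)$:

```python
from math import comb

def binom_pmf(x, n, p):
    """b(x; n, p)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

def binom_cdf(x, n, p):
    """B(x; n, p) = sum of b(i; n, p) for i = 0, ..., x."""
    return sum(binom_pmf(i, n, p) for i in range(x + 1))

n, p = 6, 0.5

# P(2 <= X <= 4) two ways: via the c.d.f. and by summing the p.m.f. directly
via_cdf = binom_cdf(4, n, p) - binom_cdf(1, n, p)
via_pmf = sum(binom_pmf(x, n, p) for x in (2, 3, 4))
print(via_cdf, via_pmf)
```

This is the same subtraction you perform when reading interval probabilities from a cumulative binomial table.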

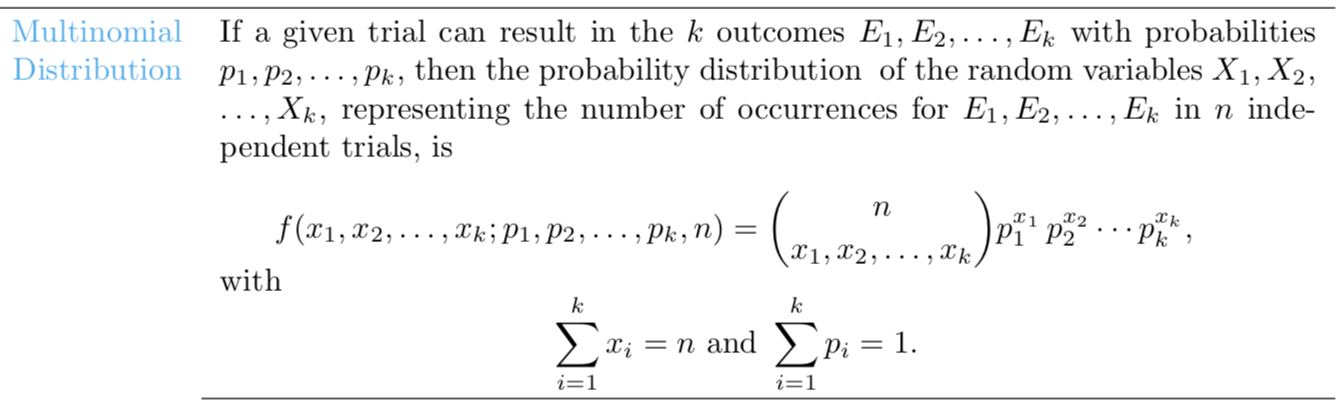

Also good to know about...

However, we will spend more time with the binomial today!

You can read more about the multinomial distribution here.

Mean and Variance of a Binomial R.V.

(then we will do some examples!)

Let $X \sim Bin(n,p)$, then $E(X) = np$, $Var(X) = np(1-p)$
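These formulas can be checked against the definitions of mean and variance applied to the p.m.f. The values $n = 10$, $p = 3/10$ below are assumptions chosen for illustration.

```python
from fractions import Fraction
from math import comb

def binom_pmf(x, n, p):
    """b(x; n, p), exact when p is a Fraction."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

n, p = 10, Fraction(3, 10)

# Mean and variance computed from the definition, summing over x = 0..n
mean = sum(x * binom_pmf(x, n, p) for x in range(n + 1))
var = sum((x - mean) ** 2 * binom_pmf(x, n, p) for x in range(n + 1))

print(mean, n * p)            # both equal np
print(var, n * p * (1 - p))   # both equal np(1 - p)
```

The formulas also follow from the earlier theorems: $X$ is a sum of $n$ independent success indicators, each with mean $p$ and variance $p(1-p)$.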

Shape and Position

How do the parameters $n$ and $p$ affect the shape of the binomial distribution?
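One way to explore this question numerically is to compute the skewness $E[(X-\mu)^3]/\sigma^3$ from the p.m.f. for a few parameter choices. This is a sketch only; the $(n, p)$ pairs are arbitrary illustrations.

```python
from math import comb

def binom_pmf(x, n, p):
    """b(x; n, p)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

def skewness(n, p):
    """Skewness E[(X - mu)^3] / sigma^3, computed from the p.m.f."""
    xs = range(n + 1)
    mu = sum(x * binom_pmf(x, n, p) for x in xs)
    var = sum((x - mu) ** 2 * binom_pmf(x, n, p) for x in xs)
    third = sum((x - mu) ** 3 * binom_pmf(x, n, p) for x in xs)
    return third / var ** 1.5

for n, p in [(10, 0.1), (10, 0.5), (10, 0.9), (100, 0.1)]:
    print(n, p, round(skewness(n, p), 4))
```

The distribution is symmetric when $p = 0.5$, skewed to the right when $p < 0.5$, skewed to the left when $p > 0.5$, and becomes more symmetric as $n$ grows with $p$ held fixed.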

Computing Probabilities of Binomial R.V.'s

We have several ways!

- By hand

- With a calculator

- Using R, or other software

- Using a table (like Table A.1 on pp. 726-727)

Example and Homework

We will do these exercises in class: 5.11, 5.22