STAT 360 - Lecture 12

What we will cover today:

- What does it "mean"?

- Discrete Random Variable Mean

- Continuous Random Variable Mean

- Multivariate Expectation

- Variance: the spread of a distribution

- Covariance: Multivariate Variance

- The Correlation Coefficient

What does it "mean"?

The expected value or the expectation of a particular random variable signifies the mean of the population.

This is not the same as the average of a sample! The expectation is the average one would get over the entire population, with each possible value weighted by its probability (or probability density).

Let's toss a 6-sided die many times. In the long run, what would be the average of our tosses?

3, 6, 1, 3, 4, 2, 1, 6, 5, ... OK, so $\frac{1}{6}$ of the time we would get 1, $\frac{1}{6}$ of the time we would get 2, $\frac{1}{6}$ of the time we would get 3, and so on up to 6.

So we expect to get the weighted average of $\frac{1}{6}(1+2+3+4+5+6) = \frac{21}{6} = \frac{7}{2}$.

We are adding up the products of each value in the support of the random variable $X$ (in the previous example, the face value of the die) and its weight (for an unbiased die, each face has an equal chance of showing up).
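The weighted sum above can be checked numerically. The following is a minimal sketch in Python, assuming a fair die; the seed and the number of simulated tosses are arbitrary choices made for illustration.

```python
from fractions import Fraction
import random

# Support and weights of a fair six-sided die (each face has probability 1/6).
faces = [1, 2, 3, 4, 5, 6]
pmf = {x: Fraction(1, 6) for x in faces}

# Exact expectation: sum of (value times weight) over the support.
expected = sum(x * p for x, p in pmf.items())
print(expected)  # 7/2

# Simulated long-run average; seed and sample size are arbitrary assumptions.
rng = random.Random(0)
n = 100_000
sample_mean = sum(rng.choice(faces) for _ in range(n)) / n
print(sample_mean)  # close to 3.5, but generally not exactly 3.5
```

The sample average moves around from run to run, while the expectation $\frac{7}{2}$ is a fixed property of the distribution.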

Discrete Random Variable Mean

Let $X$ be a discrete random variable with probability mass function $f(x)$. Then the expectation of $X$ is:

$$ E[X] = \sum_{x} x f(x) $$

Now a game with the same die. If an odd number comes up, you give me 2 $\times$ face value + 1 dollars. If an even number comes up, I give you (3 $\times$ face value - 1)/2 dollars. Let's play?

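$\frac{1}{2}$ of the time you will lose... how much? The odd faces contribute $\frac{1}{6}\left((2 \cdot 1+1)+(2 \cdot 3+1)+(2 \cdot 5+1)\right) = \frac{1}{6}(21) = \frac{7}{2}$ dollars to the expected value.

$\frac{1}{2}$ of the time you will win... how much? The even faces contribute $\frac{1}{6}\left(\frac{3 \cdot 2-1}{2}+\frac{3 \cdot 4-1}{2}+\frac{3 \cdot 6-1}{2}\right) = \frac{1}{6}\left(\frac{33}{2}\right) = \frac{11}{4}$ dollars to the expected value.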
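Putting the two pieces together gives the expected net result of the game. Here is a minimal sketch in Python, assuming a fair die (as the $\frac{1}{6}$ weights suggest); `payoff_to_me` is a hypothetical helper name introduced only for this illustration.

```python
from fractions import Fraction

def payoff_to_me(face):
    """Dollars I receive for a given face (negative means I pay you)."""
    if face % 2 == 1:
        return Fraction(2 * face + 1)       # odd: you give me 2*face + 1
    return -Fraction(3 * face - 1, 2)       # even: I give you (3*face - 1)/2

p = Fraction(1, 6)  # assumed fair die
expected_payoff = sum(payoff_to_me(x) * p for x in range(1, 7))
print(expected_payoff)  # 3/4
```

The result is $\frac{7}{2} - \frac{11}{4} = \frac{3}{4}$, so under these assumptions you would expect to lose 75 cents per roll on average.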

Sometimes we are not interested in the weighted mean of a r.v.'s support set values, but instead in the weighted mean of a function of those values.

Like the amount of dollars you can win from a game of chance, or the average number of minutes (modeled discretely) you have to wait for a bus as a function of the bus driver's caffeine level, or...

What are some other examples?

Discrete Random Variable Mean (more generally)

Let $X$ be a discrete random variable with probability mass function $f(x)$, and let $u(X)$ be a function whose domain is the support set of $X$. Then the expectation of $u(X)$ is:

$$ E[u(X)] = \sum_{x} u(x) f(x) $$

Continuous Random Variable Mean

(and let's just go straight to the more general case!)

Let $X$ be a continuous random variable with probability density function $f(x)$ and let $u(X)$ be a function whose domain is the support set of $X$. Then the expectation of $u(X)$ is:

$$ E[u(X)] = \int_{-\infty}^{\infty} u(x) f(x)\,dx $$

An Example

Suppose a train arrives shortly after 1:00PM each day, and that the number of minutes after 1:00 that the train arrives can be modeled as a continuous random variable with density:

$f(x) = 2(1-x)$ for $0 \leq x \leq 1$ and 0 otherwise.

Find the expectation of the number of minutes the train arrives after 1:00PM.

An Example

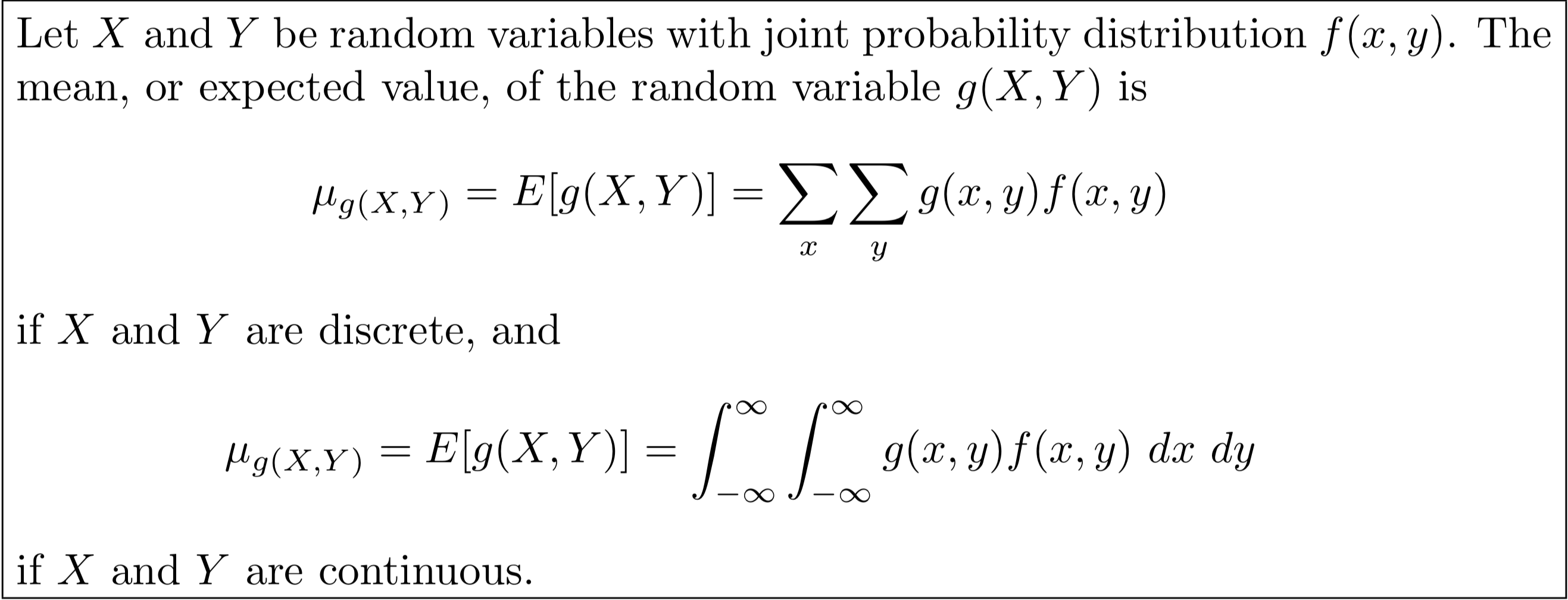
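For reference, the expectation in the train example can be worked out directly from the definition with $u(x) = x$. This is a sketch of one way to do it; the slide image may present the steps differently.

$$ E[X] = \int_0^1 x \cdot 2(1-x)\,dx = 2\int_0^1 (x - x^2)\,dx = 2\left(\frac{1}{2} - \frac{1}{3}\right) = \frac{1}{3} $$

Under this model the train arrives, on average, $\frac{1}{3}$ of a minute (20 seconds) after 1:00PM.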

Multivariate Expectation

This is the expected value of a function of several random variables (which is also a random variable). The weights for computing the expectation will depend on the joint probability distribution of the random variables involved.

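As stated in your book, for discrete and continuous random variables respectively we have:

$$ E[g(X,Y)] = \sum_{x}\sum_{y} g(x,y) f(x,y) $$

$$ E[g(X,Y)] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} g(x,y) f(x,y)\,dx\,dy $$

where $f(x,y)$ is the joint pmf or joint pdf of $X$ and $Y$.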

Example

Let $X$ and $Y$ have the joint pdf: $$ f(x,y) = 8xy, \quad 0 < x < y < 1 $$ and 0 otherwise.

Find the expected value of $g(X,Y) = XY^2$.

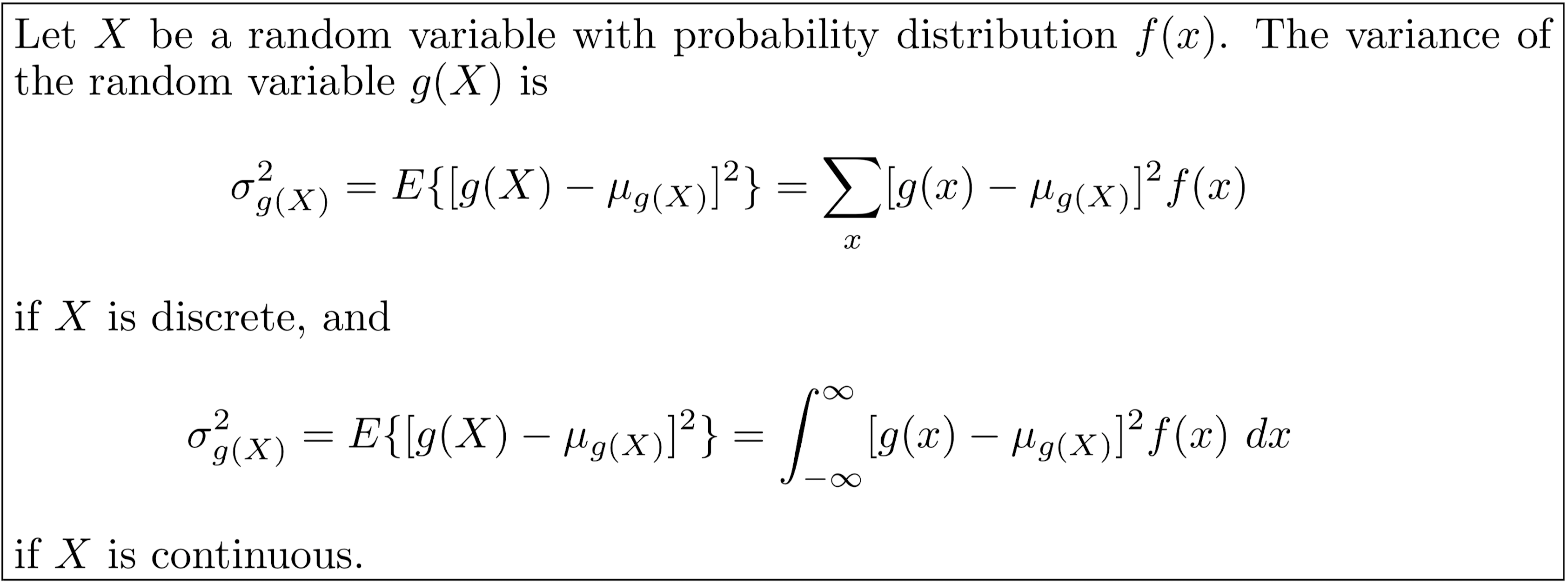
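For reference, here is one way to carry out the integration, integrating over $x$ first on the region $0 < x < y < 1$. This is a sketch; the slide image may order the steps differently.

$$ E[XY^2] = \int_0^1\int_0^y x y^2 \cdot 8xy\,dx\,dy = \int_0^1 8y^3\left(\int_0^y x^2\,dx\right)dy = \int_0^1 \frac{8}{3}y^6\,dy = \frac{8}{21} $$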

Variance: the spread of a distribution

Variance is the expectation of $u(X) = (X - \mu)^2$, where $\mu = E[X]$. That is, $Var(X) = E[(X - \mu)^2]$.

In simple English: variance is the average of the squared differences from the population mean.
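As a concrete case, the definition can be applied to the fair die from the start of the lecture. This is a minimal sketch in Python, assuming each face has probability $\frac{1}{6}$.

```python
from fractions import Fraction

# pmf of a fair six-sided die (assumption: each face has probability 1/6).
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

mu = sum(x * p for x, p in pmf.items())                # population mean E[X]
var = sum((x - mu) ** 2 * p for x, p in pmf.items())   # E[(X - mu)^2]
print(mu, var)  # 7/2 35/12
```

So the squared distance of a toss from $\frac{7}{2}$ averages out to $\frac{35}{12} \approx 2.92$.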

Important:

Theorem 4.3:

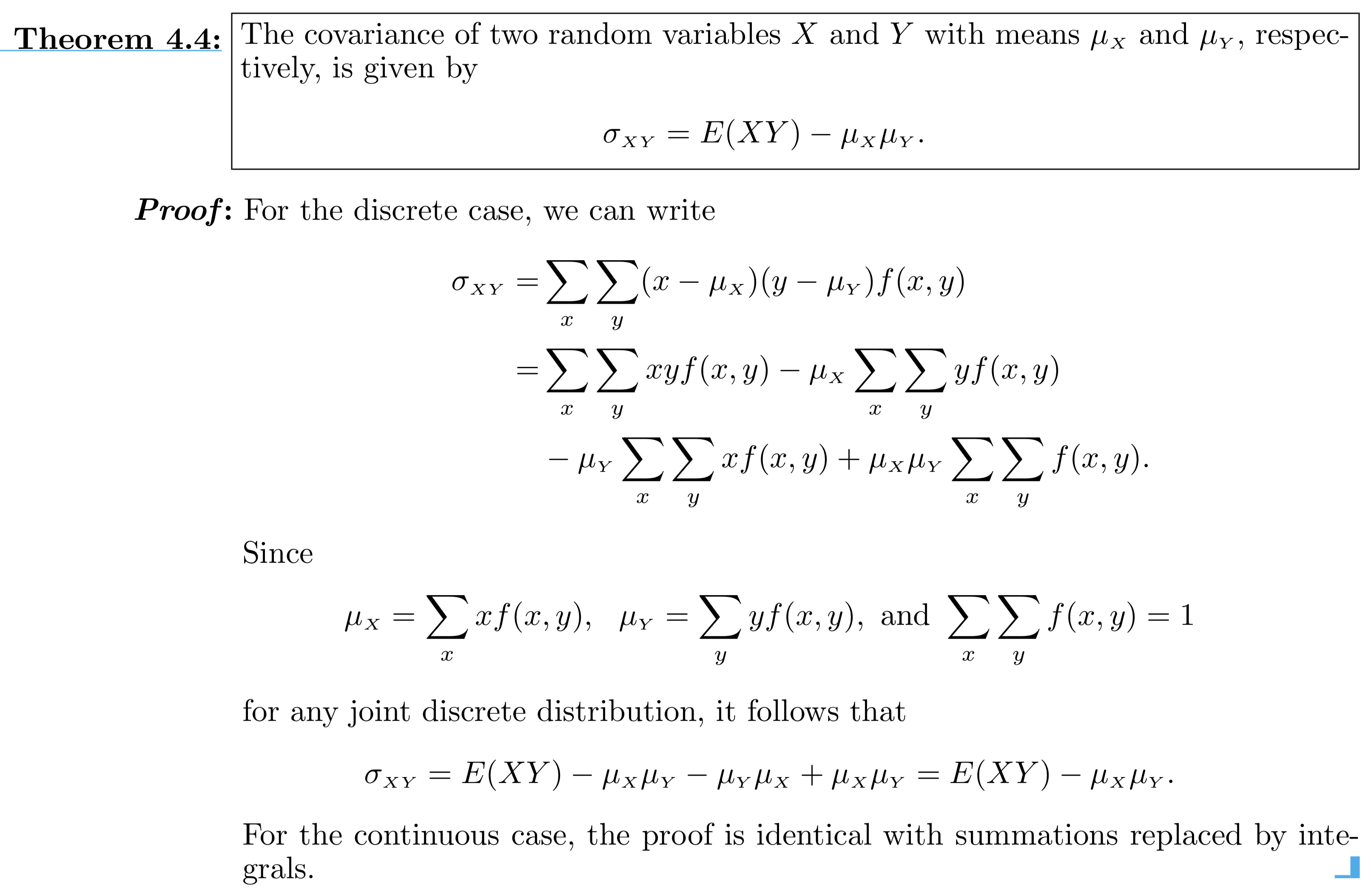

Covariance

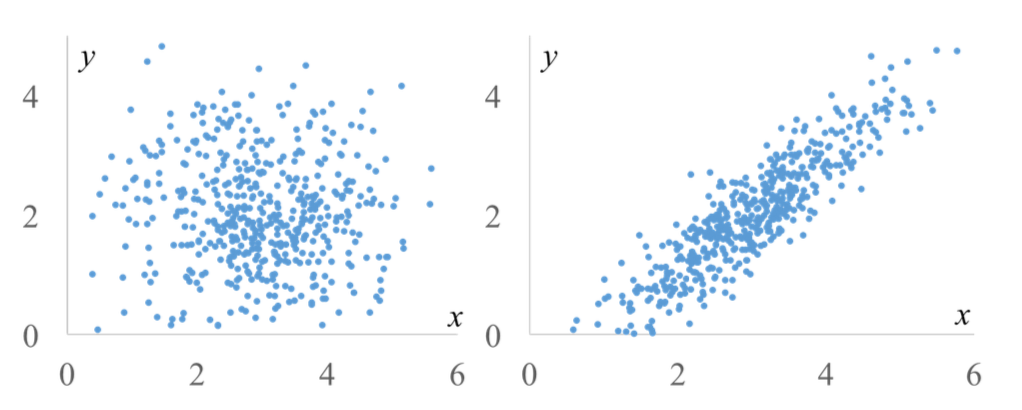

Covariance is a quantitative measure of how the deviation of one variable from its mean relates to the deviation of another variable from its own mean.

So this is a measure of association between two variables.

If $X$ and $Y$ tend to be above (or below) their respective means at the same time, then we will have that $$Cov(X,Y) = E[(X - E[X])(Y - E[Y])]$$ is positive.

If instead one of $X$ or $Y$ tends to be above its mean when the other is below its mean, then the covariance (same expression as above) will be negative.
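To see the sign behavior on a small case, here is a minimal sketch in Python that applies the definition to a joint pmf. The pmf values are hypothetical, made up so that $X$ and $Y$ usually move together; `expect` is a helper name introduced for this illustration.

```python
from fractions import Fraction

# Hypothetical joint pmf f(x, y): X and Y usually take the same value.
joint = {
    (0, 0): Fraction(2, 5),
    (1, 1): Fraction(2, 5),
    (0, 1): Fraction(1, 10),
    (1, 0): Fraction(1, 10),
}

def expect(g):
    """E[g(X, Y)] as a probability-weighted sum over the joint pmf."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

ex = expect(lambda x, y: x)                      # E[X]
ey = expect(lambda x, y: y)                      # E[Y]
cov = expect(lambda x, y: (x - ex) * (y - ey))   # E[(X - E[X])(Y - E[Y])]
print(cov)  # 3/20
```

Most of the probability sits on outcomes where both variables are on the same side of their means, so the covariance comes out positive.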

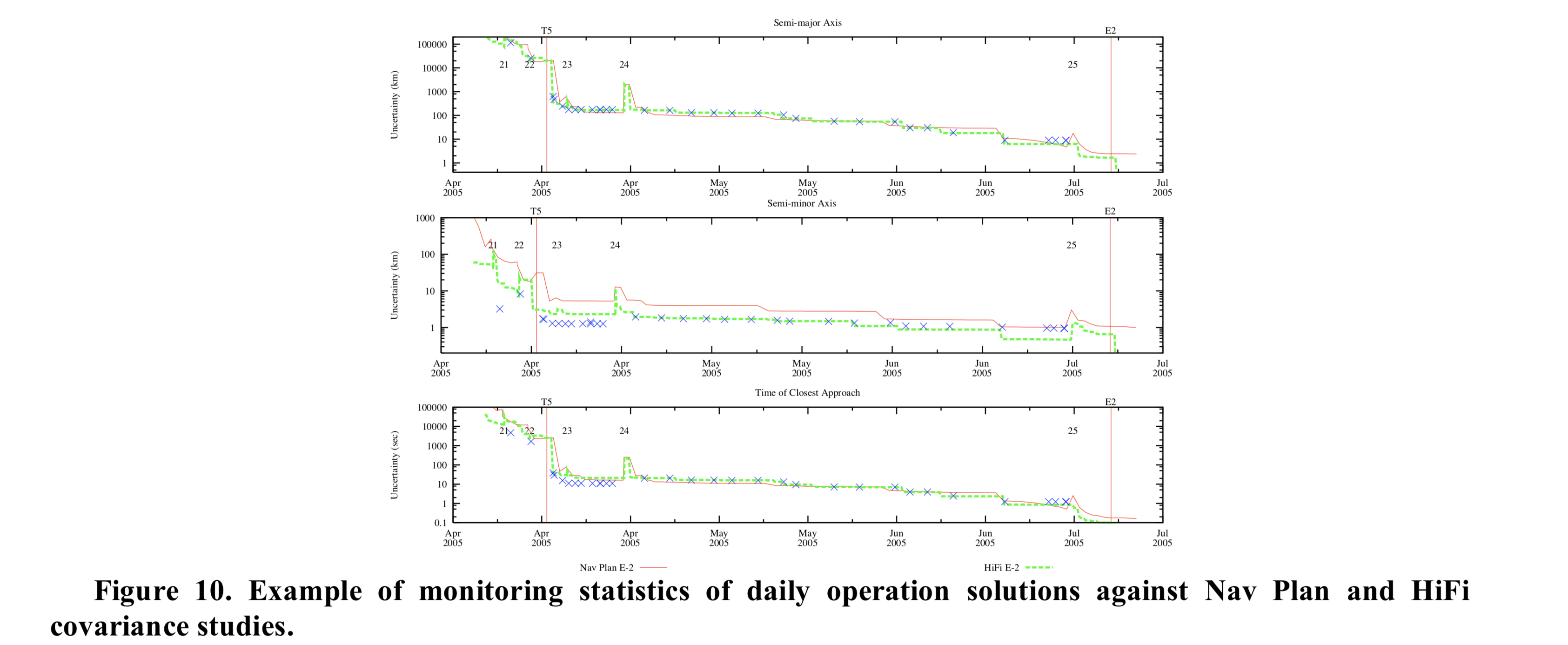

NASA cares about covariance

We often have multiple variables influencing the outcome of an experiment!

We can re-write the covariance formula as:

$$ Cov(X,Y) = E[XY] - E[X]E[Y] $$

The Correlation Coefficient

For r.v.'s $X$ and $Y$, the correlation coefficient is, simply put, the ratio between their covariance and the product of their respective standard deviations.

$$ \rho(X,Y) = \frac{Cov(X,Y)}{\sqrt{Var(X)}\sqrt{Var(Y)}} $$

This is just a convenient normalization of the covariance that extracts the relevant information: it gets rid of units and keeps the value in the range $[-1,1]$ (so the magnitude is in $[0,1]$).
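The effect of the normalization can be illustrated with a minimal sketch in Python. The joint pmf is hypothetical (made up for illustration), and `correlation` is a helper name introduced here, not something from the book.

```python
import math
from fractions import Fraction

def correlation(joint):
    """rho(X, Y) for a joint pmf given as {(x, y): probability}."""
    def expect(g):
        return sum(g(x, y) * p for (x, y), p in joint.items())
    ex, ey = expect(lambda x, y: x), expect(lambda x, y: y)
    cov = expect(lambda x, y: (x - ex) * (y - ey))
    var_x = expect(lambda x, y: (x - ex) ** 2)
    var_y = expect(lambda x, y: (y - ey) ** 2)
    return float(cov) / (math.sqrt(var_x) * math.sqrt(var_y))

# Hypothetical joint pmf: X and Y usually take the same value.
joint = {
    (0, 0): Fraction(2, 5),
    (1, 1): Fraction(2, 5),
    (0, 1): Fraction(1, 10),
    (1, 0): Fraction(1, 10),
}
# Same distribution with Y expressed in different units (multiplied by 100).
rescaled = {(x, 100 * y): p for (x, y), p in joint.items()}
# Same distribution with Y replaced by 1 - Y, which reverses the association.
flipped = {(x, 1 - y): p for (x, y), p in joint.items()}

rho = correlation(joint)
rho_rescaled = correlation(rescaled)
rho_flipped = correlation(flipped)
print(rho, rho_rescaled, rho_flipped)  # approximately 0.6, 0.6, -0.6
```

Changing the units of $Y$ multiplies the covariance by 100 but leaves $\rho$ unchanged, and reversing the association flips the sign while keeping the magnitude.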

Let's try exercises 4.50 and 4.52 together!